Game-Changing Efficiency for the Future of Computing

Signaloid's UxHw® technology allows a single execution of a program to evaluate a distribution of inputs and output a distribution result. Achieve speedups of over 1000x for stochastic workloads common in AI, quantitative finance, robotics, and more.

Simpler Code and Faster Execution:

Quantitative risk and derivatives pricing workloads in finance

Monte Carlo simulations in engineering

Reinforcement learning and Bayesian methods in AI/ML

Particle and Kalman filters in robotics and embodied AI

Use Cases

Quantitative Finance

"Our VaR calculations take hours and cost a fortune."

Accelerate risk calculations and derivatives pricing by over 400x.

Digital Banking

"Our clients want interactive multi-scenario modeling, not one-off results from an analyst."

Enable interactive what-if analysis that responds in milliseconds.

Machine Learning and AI

"We need to know when we can trust our AI/ML model predictions."

Add calibrated uncertainty to any ML inference pipeline.

Robotics and Automotive

"We need easier ways to achieve high-performance LiDAR particle filters on our edge GPU hardware."

Simplify state estimation with automatic uncertainty propagation.

Industrial Automation

"We can't trust AI/ML predictions enough to deploy them on the factory floor."

Make AI transparent with real-time confidence quantification.

Engineering Design

"We run thousands of simulations to understand design margins."

Get full uncertainty quantification in a single simulation pass.

Supply Chain Planning

"We need a compute platform that makes range-based and probabilistic forecasting easier to implement."

Replace fragile point estimates with robust probabilistic planning.

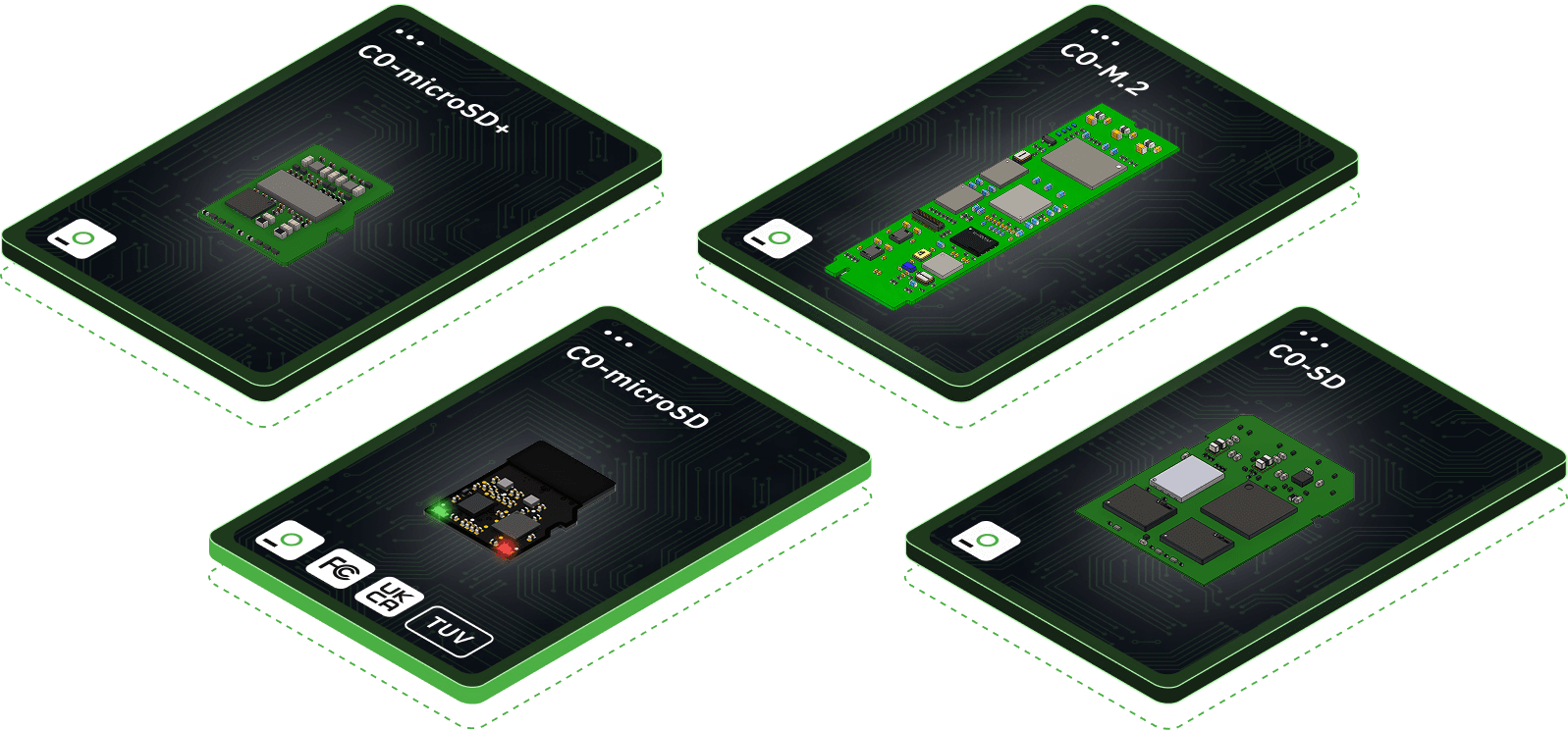

Deployment Options

Signaloid is the Next Frontier in Computing

Stochastic Workloads are Key to the Future of Computing

Signaloid's UxHw® technology uses deterministic computation on probability distributions to enable orders-of-magnitude speedups and lower implementation costs for stochastic workloads common in AI, quantitative finance, robotics, industrial automation, and more.

Cloud, On-Premises, and Edge-Hardware Deployment

Run your applications with UxHw® technology on the Signaloid Cloud Compute Engine, a cloud-based task execution platform; deploy UxHw® on-premises; or use our hardware modules in edge-of-network deployments.

Easy Adoption, Consistent Abstraction, Huge Speedups

UxHw® gives applications the abstraction of a processor whose data can be annotated with probability distributions and which handles correlations as computation proceeds. Achieve speedups of 1000x or more in key stochastic workloads.
